The Potential and Limitations of AI Chatbots as Learning Assistants in Education

Can AI chatbots be helpful for education? The idea is intriguing, considering that most children worldwide have direct or indirect access to a smartphone. Imagine opening WhatsApp to find a personal tutor that can answer "all" your questions. This vision drives many pilot projects and digital learning platforms like Duolingo, Khan Academy, and Squirrel, which I wrote about some time ago. Can the human-like conversation we are now getting used to from ChatGPT lead to such a personal tutor in your pocket?

The intricacy of learning is frequently underestimated when new AI initiatives are lauded.

Breaking Down the Potential and Challenges of AI Chatbots in Education

To understand the potential and challenges, one must break the topic down into its pieces. Which subjects could a chatbot teach? A chatbot for highly standardized subjects like math or natural science differs from one teaching the interpretation of a novel. The grammar rules of a language can be taught through quizzes and tests, and here online learning already contributes significantly to education. However, learning to deal with complex situations and scenarios, which is critical in many life and business situations, is very difficult to teach through conversation with a machine. No technology can replicate the engaging, interactive atmosphere created by a live instructor and a group of fellow students. Therefore, AI chatbots can only be used for a limited set of subjects and tasks.

Where can these chatbots help? AI chatbots could complement learning outside of school. Ideally, they could emulate the approach of great explanatory YouTube videos that simplify complex concepts, or follow the teaching style of Khan Academy. ChatGPT can explain concepts from various angles and in simple or complex words. But this only works if you know how to prompt such a large language model, and even then you cannot be sure that its answer is true, which is particularly problematic when you are just learning the topic yourself.

Comparing Rule-Based Chatbots and Large Language Model Chatbots

Chatbots can be built rule-based or, more recently, in a very open way using large language models. Only rule-based chatbots give you control over the flow of teaching. This adaptive learning essentially cycles between explanation and exercise. But the explanations and exercises are static. They might come with some variation, but they quickly reach their limits. The AI component often predicts the student's learning level and which step or exercise would be best next.
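
To make the cycle between explanation and exercise concrete, here is a minimal sketch of a rule-based tutor loop. It is not the code of any real product: the `Step` class, the `LESSON` content, and the `run_lesson` function are all hypothetical names invented for this illustration, and the "chat" is simulated with a plain list of student replies.

```python
from dataclasses import dataclass


@dataclass
class Step:
    explanation: str  # static text shown once before the exercise
    question: str     # static exercise prompt
    answer: str       # the single accepted answer


# Hypothetical lesson content, hard-coded purely for illustration.
LESSON = [
    Step("To add fractions with the same denominator, add the numerators.",
         "1/5 + 2/5 = ?", "3/5"),
    Step("To subtract them, subtract the numerators instead.",
         "4/7 - 1/7 = ?", "3/7"),
]


def run_lesson(steps, replies):
    """Cycle through explanation and exercise; return the chat transcript."""
    transcript = []
    replies = iter(replies)
    for step in steps:
        transcript.append(("bot", step.explanation))
        while True:
            transcript.append(("bot", step.question))
            reply = next(replies, None)
            if reply is None:  # the student stopped answering
                return transcript
            transcript.append(("student", reply))
            if reply.strip() == step.answer:
                transcript.append(("bot", "Correct!"))
                break
            # A fixed rule: on a wrong answer, the same exercise is repeated.
            transcript.append(("bot", "Not quite. Try again."))
    return transcript
```

Calling `run_lesson(LESSON, ["2/5", "3/5", "3/7"])` walks through both steps and repeats the first exercise once. Every possible path is written down in advance, which is exactly what gives the author of such a bot control over the flow, and also why the content runs out quickly.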

Large language models as personalized learning assistants have no limits in terms of explanations and exercises as they could create endless variations and different versions, which might or might not lead to better quality. However, as of 2024, it is not possible to create a controlled and risk-free chatbot that would assist a student in learning for the following reasons:

- Large language models (LLMs) excel due to their wide-ranging knowledge across various subjects. Restricting them to a single area, like math, can reduce their effectiveness. Their human-like responses depend on this broad informational access, and limiting their scope compromises their utility. This challenge of balancing specialization with versatility is common in many fields where LLMs are applied.

- A large language model is not designed to guide a student through their learning journey or make crucial decisions about their educational path. It lacks the capability to actively manage or adapt a student's learning progression, which is essential for effective personalized education. This limitation exists for several good reasons, including the model's inability to understand individual learning needs and contexts fully.

- Unlike strictly rule-based learning steps, LLMs easily hallucinate, which is risky for people who are just learning the subject and cannot spot the errors.

- Furthermore, AI models are trained predominantly on a few world languages and lack substantial amounts of information in other languages. Even if the content is translated, the model often draws on educational content from only selected parts of the world.

Study on the Impact of an AI-Tutor on Math in Ghana

These are just a few points illustrating the different approaches and outcomes that so-called AI chatbots as learning assistants could have. Testing and measuring such approaches is therefore key, and I was glad to see a recent study called "Effective and Scalable Math Support: Evidence on the Impact of an AI-Tutor on Math Achievement in Ghana." The study analyzed the effects of an AI-powered chatbot tutor called Rori for math learning and concluded that the chatbot could indeed improve students' learning outcomes.

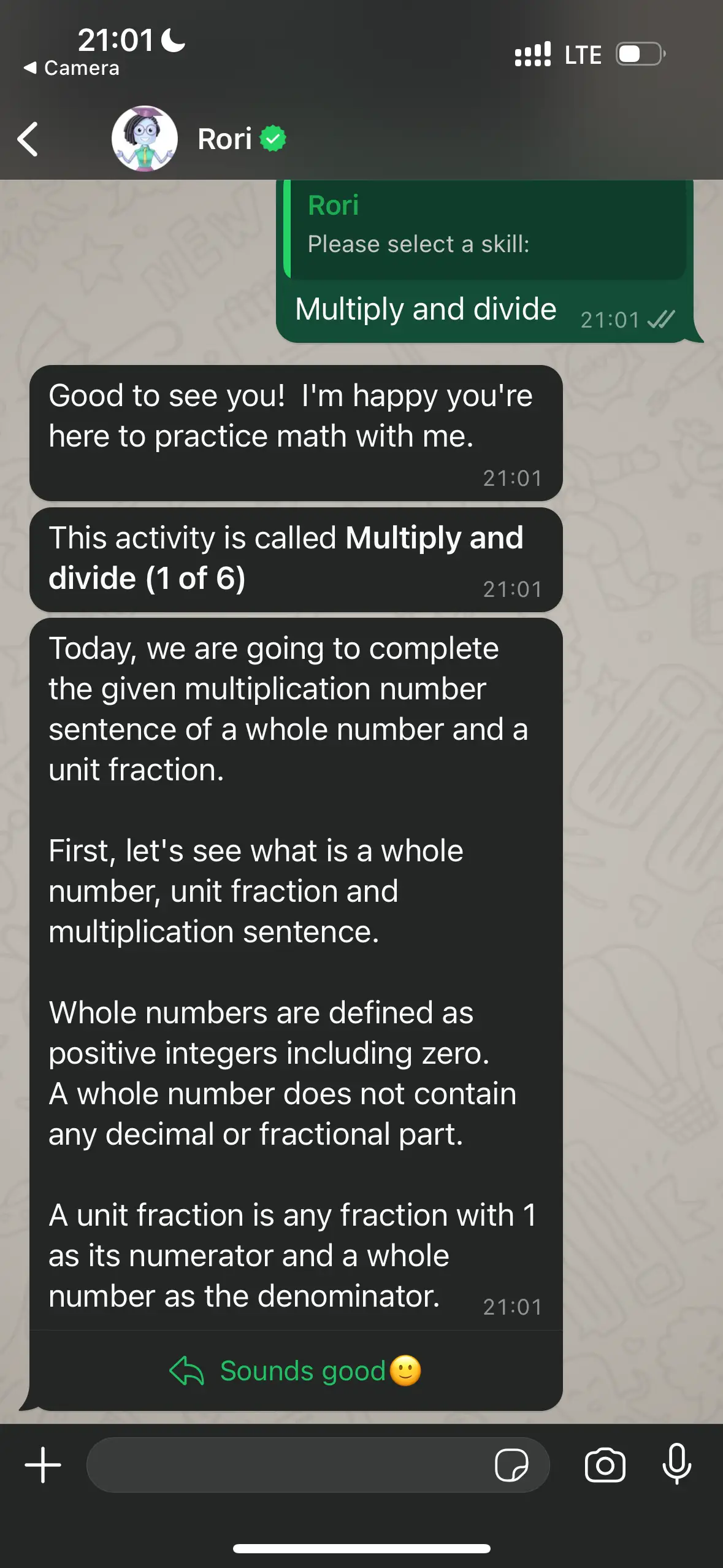

I tested Rori myself, which the organization behind it, Rising Academies, made possible. I spent 15 minutes with the chatbot, and it appeared to me to be very much a rule-based or step-based chatbot. There is a nice onboarding with some motivational remarks about learning in general, and then students can choose between different areas of math. You can click down to a specific topic and then get, for example, the following explanation (see image).

This explanation is all I got before the first exercise. I couldn't ask for more information or different types of explanations. Then the exercises started. They were very simple, but the chatbot couldn't handle failures: I repeatedly entered wrong results, and it just repeated the same exercise again and again without any further explanation or help. To be fair, it was only a short test, but it seems that either you understand the explanation or you're lost. This is where I expect an educational chatbot to be more helpful: it should be able to react to your issues and find different ways to explain the same topic.
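
What I have in mind can be sketched in a few lines. This is not how Rori works, and I have no insight into its code; `EXPLANATIONS` and `pick_explanation` are hypothetical names, and the alternative wordings are made up for this example. The idea is simply that each wrong attempt moves the student on to a different explanation of the same topic instead of repeating the same exercise unchanged.

```python
# Hypothetical alternative explanations for one topic, ordered from the
# default wording to progressively more concrete ones.
EXPLANATIONS = [
    "To add fractions with the same denominator, add the numerators.",
    "Think of a pizza cut into 5 slices: 1 slice plus 2 slices is 3 slices, so 3/5.",
    "Step by step: keep the 5 below the line, then compute 1 + 2 = 3 above it.",
]


def pick_explanation(explanations, wrong_attempts):
    """Return a different explanation after each wrong attempt.

    Once every variant has been used, stay on the last one instead of failing.
    """
    index = min(wrong_attempts, len(explanations) - 1)
    return explanations[index]
```

Even this tiny rule would have changed my test experience. A large language model could, in principle, generate such variants on the fly, but then the concerns about control and hallucination from the list above apply.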

A positive aspect of the study in Ghana is that it employed a randomized controlled trial and found, perhaps unsurprisingly, that some form of learning support tends to lead to better outcomes than none at all. However, the study then enters a realm of abstraction without offering a holistic view of the experiment or the learning context of the participants. The researchers measured a larger improvement over the baseline for the chatbot-supported students. As the chatbot did not amaze me, I do not find the increase amazing either.

A significant gap in the study is its lack of context about the students' backgrounds beyond their access challenges, and where such an app might be most effective. Additionally, the tests were conducted under observation, and the study provides no insight into the students' motivation to use the tool outside school settings. It's ironic that a digital tool is evaluated solely based on outcomes from a paper exam. If you use a digital tool in the first place, you might as well use all the digital metrics available from the tool to analyze its impact.

It reminds me of a recent article in The Economist that praised the potential of AI chatbots for education; the magazine is also in favor of instructed learning material. And here lies the problem. Just because there is a lack of teachers in many countries, shall we now hope for AI chatbots to solve that scarcity? Or is any information better than none, even if it has various flaws? One thing is sure: digital education has opened many doors for learning for everyone, be it formal Massive Open Online Course (MOOC) classes or great informal YouTube videos. But the "AI" in digital learning still needs many iterations to deliver a truly amazing learning experience, and for now it won't be the right partner for learning to deal with the growing complexity of our society.